Validity and soundness in scientific software

Wednesday, November 4, 2009

Privilege

CSER poster session

Monday, November 2, 2009

My poster was in the form of nine "slides". Here they are, with a bit of explanation about each of the slides.

| My study is, as you know, still underway. What I'm presenting here are the method and some preliminary results. I wanted to present at CSER because I wanted to hear what other people would say about some of my findings so far, and whether anyone would have suggestions of where to go next. |

| As a motivation for my study, consider how the computational scientist qua climatologist goes about trying to learn about the climate. In order to test their theories of the climate, they would like to run experiments. Since they cannot run experiments on the climate, they instead build a computer simulation of the climate (a climate model) according to their theories and then run their experiments on the model. At every step, approximations and errors are introduced. Moreover, the experiments that they run cannot all be replicated in the real world, so there is no "oracle" they can use to check their results against. (I've talked about this before.) All of this might lead you to ask... Why do climate modelers trust their models? Or... |

| ... for us as software researchers, we might ask: why do they trust their software? That is, irrespective of the validity of their theories, why do they trust their implementation of those theories in software? The second question should actually read "What does software quality mean to climate modelers*?" As I see it, you can try to answer the trust question by looking at the code or development practices, deciding if they are satisfactory and, if they are, concluding that the scientists trust their software because they are building it well and it is, in some objective sense, of high quality. Or you can answer this question by asking the scientists themselves why they trust their software -- what plays into their judgment of good-quality software. In this case the emphasis in the question is slightly different, "Why do climate modelers trust their software?" The second, and to some extent third, research questions are aimed here. * Note how I alternate between using "climate scientist" and "climate modeler" to reference the same group of people. |

| My approach to answering these questions is to do a defect density analysis (I'm not sure why I called it "repository analysis" on my slides. Ignore that) of several climate models. Defect density is an intuitive and standard software engineering measure of software quality. The standard way to compute defect density is to count the number of reported defects for a release per thousand lines of code in that release. There are lots of problems with this measure, but one is that it depends on how good the developers are at finding and reporting bugs. A more objective measure of quality may be their static fault density, so I did this type of analysis as well. Finally, I interviewed modelers to gather their stories of finding and fixing bugs as a way to understand their view of, and decision-making around, software quality. There are five different modeling centres participating in various aspects of this study. |
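As a minimal sketch of the defect density measure described above (defects per thousand lines of code), here is a small Python helper. The function name and the numbers are made up for illustration; they are not results from the study.

```python
def defect_density(defect_count, lines_of_code):
    """Return the number of defects per thousand lines of code (KLOC)."""
    if lines_of_code <= 0:
        raise ValueError("lines_of_code must be positive")
    return defect_count / (lines_of_code / 1000.0)


# Hypothetical release: 24 reported defects in 120,000 lines of code.
example = defect_density(defect_count=24, lines_of_code=120_000)
print(f"{example:.2f} defects per KLOC")
```

The same helper works whether the defect count comes from tickets or from check-in comments; only the counting rule changes.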

| A very general definition of a defect is: anything worth fixing. Deciding what is worth fixing is left up to the people working with the model, so we can be sure we are only counting relevant defects. Many of the modeling centres I've been in contact with use some sort of bug tracking system. That makes counting defects easy enough (the assumption being that if there is a ticket written up about an issue, and the ticket is resolved, then it was worth fixing and we'll call it a defect). Another way to identify defects is to look through the check-ins of the version control repository and decide if the check-in was a fix for a defect simply by looking at the comment itself. Sure, it's not perfect, but it might be a more reliable measure across modeling centres. |

| Presented here is the defect density for an arbitrary version of the model from each of the modeling centres. For perspective, along the x-axis of the chart I've labeled two ranges "good" and "average" according to Norman Fenton's online book on software metrics. I've included a third bar, the middle one, that shows the defect density when you consider only those check-in comments which can be associated with tickets (i.e. there is a reference in the comment to a ticket marked as a defect). The top, "all defects", bar is the count of check-in comments that look like defect fixes. I have included in the count all of the comments made 6 months before and after the release date. You can see that bar is divided into two parts. The left represents the pre-release defects, and the right represents the post-release defects. As yet, the main observation I have is that all of the models have a "low" defect density however you count defects (tickets, or check-in comments). It's also apparent that the modeling centres use their ticketing systems to varying degrees, and that they have different habits about referencing tickets in their check-in comments. |
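The counting described above (check-in comments that look like defect fixes, within 6 months either side of a release, split into pre- and post-release) could be sketched roughly as follows. In the study the comments were judged by reading them; the keyword pattern, the 183-day window, and all names here are assumptions for illustration only.

```python
import re
from datetime import date, timedelta

# Hypothetical keyword heuristic standing in for a human reading each comment.
FIX_PATTERN = re.compile(r"\b(fix(es|ed)?|bugs?|defects?)\b", re.IGNORECASE)


def looks_like_fix(comment):
    """Guess whether a check-in comment describes a defect fix."""
    return bool(FIX_PATTERN.search(comment))


def count_defects(checkins, release, window_days=183):
    """Count fix-like check-ins around a release.

    checkins is a list of (date, comment) pairs. Returns a tuple
    (pre_release_count, post_release_count) for check-ins that fall
    within window_days of the release date.
    """
    window = timedelta(days=window_days)
    pre = post = 0
    for day, comment in checkins:
        if abs(day - release) > window or not looks_like_fix(comment):
            continue
        if day < release:
            pre += 1
        else:
            post += 1
    return pre, post


# Made-up check-ins, not data from any modeling centre.
checkins = [
    (date(2009, 3, 1), "Fixed array bounds bug in ocean diagnostics"),
    (date(2009, 7, 15), "fix typo in radiation table"),
    (date(2009, 7, 20), "add new aerosol scheme"),
    (date(2008, 1, 1), "bug fix"),  # outside the window
]
print(count_defects(checkins, release=date(2009, 6, 1)))
```

A keyword match will both miss fixes and flag non-fixes, which is one reason the counts are only a rough measure.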

| I ran the FLINT tool over a single configuration of, currently, only two climate models. The major faults I've found are about implicit type conversion and declaration. As well, there is a significant (but small) portion of faults that suggest dead code. Of course, because I'm analysing only a single configuration of the model, I can't be sure that this code is really dead. I've inspected the code where some of these faults occur and I've found instances of both dead code and of code that isn't really dead because it is used in other configurations. One example of dead code I found came from a module that had a collection of functions to perform analysis on different array types. The analysis was similar for each function, with a few changes to the function to handle the particularity of the array. The dead code found in this module was variables that were declared and set but never referenced. My guess from looking at the regularities in the code is that because the functions were so similar, the developers just wrote one function and then copied it several times and tweaked it for each array type. In the process they forgot to remove code that didn't apply. |

| Unfortunately, I have as yet only been able to interview a couple of modellers specifically about defects they have found and fixed. I have done a dozen or so interviews with modelers and other computational scientists to talk about how they do their development and about software quality in general. So this part of the study is still a little lightweight, and very preliminary. In any case, when I've done the interviews I ask the modelers to go through a couple of bugs that they've found and fixed. I roughly asked them these questions. Everyone I've talked to is quite aware that their models have bugs. This, they accept as a fact of life. Partly this is a comment on the nature of a theory being an approximation, but they also include software bugs here too. Interestingly, they still believe that, depending on the bug, they can extract useful science from the model. One interviewee described how in the past, when computer time was more costly, if scientists found bugs part way through a 6-month model run they might let the run continue and publish the results, but include a note about the bug they found and an analysis of its effect. |

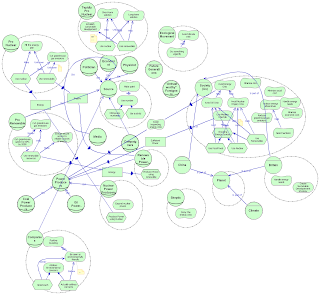

| The other observation I have is connected to the last statement on the previous slide, as well as this slide. Once the code has reached a certain level of stability, but before the code is frozen for a release of the model, scientists in the group will begin to run in-depth analysis on it. Both bug fixes and feature additions are code changes that have the potential to change the behaviour of the model, and so invalidate the analysis that has already been done on the model. This is why I say that some bugs can be treated as "features" of a sort: just an idiosyncrasy of the model. Similarly, a new feature might be rejected as a "bug" if it's introduced too late in the game. In general, the criticality of a defect is in part judged on when it is found (like any other software project I suppose). I've identified several factors that I've heard the modellers talk about when they consider how important a defect is. I've roughly categorised these factors into three groups: concerns that depend on the project timeline (momentum), concerns arising from high-level design and funding goals (design/funding), and the more immediate day-to-day concerns of running the model (operational). Very generally, these concerns have more weight at different stages in the development cycle, which I tried to represent on the chart. Describing these concerns in detail probably involves a separate blog post. |

Morning discussion for the WSRCC

Monday, October 26, 2009

- Drop out of research. We recognise climate change is an urgent problem and that many scientific research projects have very indirect, uncertain, and long-term payoffs. For the most part, the problem of climate change is fairly well analysed and many solutions are known, but in need of political organisation in order to carry them out. Perhaps really what is needed is for more people to "roll up their sleeves" and join a movement or organisation that's fighting towards this.

- Engage in action research/participatory research. If you decide to stay in research then we propose that you ground your studies by working on problems that you can be sure real stakeholders have. In particular, we suggest that you start with a stakeholder that is directly involved in solving the problem (e.g. activists, scientists, journalists, politicians) and that you work with throughout your study. At the most basic level, they act as a reality-check for your ideas, but we think that the best way to make this relationship work is through action research: joining their organisation to solve their problems, becoming directly involved in the solutions yourself. Finding publishable results is an added bonus which is secondary to the pressing need.

- Elicit the requirements of real world stakeholders. As you can see from the last point, we're concerned that as software researchers we lack a good understanding of the problems holding us (society) back from dealing with climate change effectively. So, we suggest a specific research project that surveys all the actors to figure out their needs and the places where software research can contribute. This project would involve interviewing activists, scientists, journalists, politicians, and citizens to build a research roadmap.

- Green metrics: dealing with accountability in a carbon market. This idea is more vague, but simply a pointer to an area where we think software research may have some applicability. Assuming there is a compliance requirement for greenhouse gas pollution (e.g. a cap and trade system), then we will need to be able to accurately measure carbon emissions on all levels: from industry to homes.

- Software for emergencies. Like the last point, this one is rather vague. The idea is this: in doomsday future scenarios of climate change, the world is not a peaceful place. Potentially more decision-making is done by people in emergency situations. This context shift might change the rules for interface design: in peacetime, say, a user might be unwilling to double-click on a link, or might be willing to spend time browsing menus, but in a disaster scenario their preferences may change. So, how exactly do a user's preferences change in an emergency, and how might we design software to adjust to them?

- Make video-conferencing actually easy. This was our experience all through the day:

If we ever want to maintain our personal connections without traveling we need to solve this problem. You'd think that we had already solved it, as we have the basic technology already in place. We have Skype, but it is just too flaky to rely on for important gatherings. Or, maybe, hotels and conference centres can't deal with the bandwidth demands. Or, maybe conference organisers don't make remote attendance a priority.

Even getting us through the basic technological obstacles may not be enough for a rich conference participation. Simply having a video and audio feed doesn't compare to face-to-face conversations. Maybe it never will, but certainly we can do better?

Position papers from the 1st Intl. Workshop on Software Research and Climate Change

Sunday, October 25, 2009

To begin, and as a refresher, I thought I'd post a single sentence summary of each of the position papers submitted for this workshop. Position papers were solicited from participants and were to respond to the challenge stated on the opening page of the workshop. In summary, the challenge is: how do we apply our expertise in software research to save our butts from certain destruction due to climate collapse. Or, as Steve puts it, "how can we apply our research strengths to make significant contributions to the problems of mitigation and adaptation of climate change."

In answer to that challenge, the position papers suggest software research should...

"Data Centres vs. Community Clouds", Gerard Briscoe and Ruzanna Chitchya

... tackle the energy inefficiency of cloud computing by investigating decentralised models where consumer machines also become providers and coordinators of computing resources.

"Optimizing Energy Consumption in Software Intensive systems", Arjan de Roo, Hasan Sozer and Mehmet Aksit

... provide the tools and design patterns for building software systems that meet both their energy-consumption requirements and their functional design requirements.

"Modeling for Intermodal Freight Transportation Policy Analysis", J. Scott Hawker

... improve three aspects of decision-making tools (like, say, an intermodal freight transportation policy analysis model): make them easier to use and interact with (HCI-wise); deal with the complexity of the models and the troubles with integrating various existing implementations; as well as (my favourite), make sure the software is built well since most of the folks doing the building are not trained.

"Computing Education with a Cause", Lisa Jamba

... investigate how to involve computer science students in research "toward improving health outcomes related to climate change" as part of the university curriculum.

"Some Thoughts on Climate Change and Software Engineering Research", Lin Liu, He Zhang, and Sheikh Iqbal Ahamed

... investigate how to navigate and integrate knowledge from many different disciplines and perspectives so as to help people communicate and work together; build decision-support, analysis and educational tools for people, companies, and government; build tools for incorporating environmental non-functional requirements into software construction.

"Refactoring Infrastructure: Reducing emissions and energy one step at a time", Chris Parnin and Carsten Görg.

... use insights from software refactoring to develop refactoring techniques for physical infrastructure (energy grid, water supply, etc.).

"In search for green metrics", Juha Taina and Pietu Pohjalainen

... establish a "framework for estimating or measuring the effects of a software systems' effect on climate change."

"Enabling Climate Scientists to Access Observational Data", David Woollard, Chris Mattmann, Amy Braverman, Rob Raskin, and Dan Crichton

... build systems to help climate scientists locate, transfer, and transform observational data from disparate sources.

"Context-aware Resource Sharing for People-centric Sensing", Jorge Vallejos, Matthias Stevens, Ellie D’Hondt, Nicolas Maisonneuve, Wolfgang De Meuter, Theo D’Hondt, and Luc Steels.

... investigate how to use our everyday hand-held devices as sensors to provide fine-grained environmental data.

"Language and Library Support for Climate Data Applications", Eric Van Wyk, Vipin Kumar, Michael Steinbach, Shyam Boriah, and Alok Choudhary

... build language extensions and libraries to make climate data analysis easier and more computationally efficient.

Modeling the solutions to climate change

Tuesday, October 20, 2009

Geoscientific Model Development

Sunday, October 18, 2009

Talk: Climate Change & Psychological Barriers to Change

Tuesday, September 22, 2009

This week is Earthcycle at U of T: an environment week with many many great happenings (see the link for more info). In particular there is what looks to be a great lecture on Thursday discussing the recent report on psychology and climate change from the American Psychological Association.

Here's the full posting:

Thurs. Sept. 24

7:00 p.m. – 9:00 p.m.

Lecture: Climate Change & Psychological Barriers to Change, with Dr. Judith Deutsch (Science for Peace) & Prof. Danny Harvey (U of T)

International Student Centre, Cumberland Room

33 St. George Street

This is, in part, a summary of a major report by the American Psychological Association’s Task Force on the Interface Between Psychology and Global Climate Change titled “Psychology and Global Climate Change: Addressing a Multi-faceted Phenomenon and Set of Challenges.”

The study includes sections on concern for climate change, not feeling at risk, discounting the future, ethical concerns, population issues, consumption drivers, counter-consumerism movements, psychosocial and mental health impacts of climate change, mental health issues associated with natural and technological disasters, lessons from Hurricane Katrina, uncertainty and despair, numbness or apathy, guilt regarding environmental issues, heat and violence, displacement and relocation, social justice implications, media representations, anxiety, psychological benefits associated with responding to climate change, types of coping responses, denial, judgmental discounting, tokenism and the rebound effect, and belief in solutions outside of human control.

A copy of the report is available at http://www.apa.org/science

Dr. Judith Deutsch is a psychiatric social worker and President of Science for Peace.

Prof. Danny Harvey is with the Geography Department at UofT, a member of the IPCC, and an internationally renowned climate change expert.

Organized by Science for Peace

Facebook event page:

http://www.facebook.com/event.php?eid=131392308428&index=1

On static analysis

Monday, August 31, 2009

Abstract of my study for the AGU

Here's the current draft of the abstract. I've found it a little tricky to write an abstract for work that I haven't yet completed but I've given it a go. I've gotten some excellent feedback from some of my colleagues (big up to: Steve, Neil, Jono, and Jorge) as to how to frame the problem and my "results" (in quotations because I don't yet have concrete results).

Feedback on clarity, wording, grammar, framing of the problem and results, etc. is very much welcome.

On the software quality of climate models

A climate model is an executable theory of the climate; the model encapsulates climatological theories in software so that they can be simulated and their implications investigated directly. Thus, in order to trust a climate model one must trust that the software it is built from is robust. Our study explores the nature of software quality in the context of climate modelling: How do we characterise and assess the quality of climate modelling software? We use two major research strategies: (1) analysis of defect densities -- an established software engineering technique for studying software quality -- of leading global climate models and (2) semi-structured interviews with researchers from several climate modelling centres. We collected our defect data from bug tracking systems, version control repository comments, and from static analysis of the source code. As a result of our analysis, we characterise common defect types found in climate model software and we identify the software quality factors that are relevant for climate scientists. We also provide a roadmap to achieve proper benchmarks for climate model software quality, and we discuss the implications of our findings for the assessment of climate model software trustworthiness.

Workshops at PowerShift Canada

Monday, August 17, 2009

- A climate science backgrounder

- Climate modelling 101

- An insider's perspective on the IPCC

- Communicating the science of climate change

- Developing Canada's GHG inventory

Counting lines of code

| Lines | Type | Physical Line? | Logical Line? |
| --- | --- | --- | --- |
| !! this is a comment | comm | no | no |
|  | blank | no | no |
| #if defined foo | exec | yes | yes |
| #ifdef key_squares | exec | yes | yes |
| #include "SetNumberofcells.h" | comp | yes | yes |
| #else | exec | yes | no |
| #endif | exec | yes | yes |
| SUBROUTINE A(Sqr_Grid) | decl | yes | yes |
| USE Sqr_Type | exec | yes | no |
| IMPLICIT NONE | decl | yes | yes |
| IF (assoc(cur_grid)) THEN | exec | yes | yes |
| Type(grid), Pointer :: Sqr_Grid | decl | yes | yes |
| WRITE(*,*) & | exec | yes | no |
| 'Hello' | exec | yes | yes |
| ENDIF | exec | yes | yes |
| END SUBROUTINE A | data | yes | no |

The physical line count is just a count of non-blank, non-comment lines. The logical line count tries to be a bit smart by counting lines in more abstract terms (I imagine a philosopher-computer scientist in some windowed office somewhere chin-stroking and asking, "What is a line of code?"). Anyhow, CodeCount computes logical line count by ignoring lines with continuation characters (e.g. "&") and certain other statements (e.g. "USE", "CASE", "END IF", "ELSE") and by counting each statement in a multi-statement line as a separate line. The full specification is in the CodeCount source if you're interested.
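To make the two counts concrete, here is a rough Python sketch of the rules as described in this post. It is not CodeCount's actual algorithm: the list of skipped statements is only the partial one quoted above, it assumes free-form source with "!" comments, and the function and pattern names are my own.

```python
import re

# Statements the post says are skipped for the logical count. This is a partial,
# illustrative list, not CodeCount's actual rule set.
SKIPPED_STATEMENT = re.compile(r"^(USE|CASE|END\s+IF|ELSE)\b", re.IGNORECASE)


def count_lines(source):
    """Return (physical, logical) line counts for free-form Fortran text."""
    physical = 0
    logical = 0
    for raw_line in source.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("!"):
            continue  # blank lines and comment lines count as neither
        physical += 1
        if line.endswith("&"):
            continue  # continued statement: counted once, on its final line
        # Naive split: a ";" inside a string literal would be miscounted.
        for statement in line.split(";"):
            statement = statement.strip()
            if statement and not SKIPPED_STATEMENT.match(statement):
                logical += 1
    return physical, logical


sample = """!! this is a comment

USE Sqr_Type
IMPLICIT NONE
WRITE(*,*) &
'Hello'
a = 1; b = 2
"""
print(count_lines(sample))
```

For this sample there are five physical lines, and the logical count differs because the USE line and the continued WRITE line are skipped while the last line contributes two statements.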

Some results from Forcheck

Friday, July 17, 2009

1564x[344 I] implicit conversion of constant (expression) to higher accuracy

635x[681 I] not used

265x[675 I] named constant not used

144x[323 I] variable unreferenced

144x[699 I] implicit conversion of real or complex to integer

125x[ 94 E] syntax error

108x[109 I] lexical token contains non-significant blank(s)

107x[319 W] not locally allocated, specify SAVE in the module to retain data

96x[557 I] dummy argument not used

78x[345 I] implicit conversion to less accurate data type

65x[316 W] not locally defined, specify SAVE in the module to retain data

65x[665 I] eq.or ineq. comparison of floating point data with constant

38x[313 I] possibly no value assigned to this variable

35x[342 I] eq.or ineq. comparison of floating point data with zero constant

34x[644 I] none of the entities, imported from the module, is used

27x[124 I] statement label unreferenced

27x[315 I] redefined before referenced

27x[341 I] eq. or ineq. comparison of floating point data with integer

22x[125 I] format statement unreferenced

21x[ 1 I] (MESSAGE LIMIT REACHED FOR THIS STATEMENT OR ARGUMENT LIST)

21x[514 E] subroutine/function conflict

19x[530 W] possible recursive reference

18x[674 I] procedure, program unit, or entry not referenced

18x[598 E] actual array or character variable shorter than dummy

10x[340 I] equality or inequality comparison of floating point data

8x[325 I] input variable unreferenced

7x[347 I] non-optimal explicit type conversion

7x[565 E] number of arguments inconsistent with specification

6x[582 E] data-type length inconsistent with specification

6x[668 I] possibly undefined: dummy argument not in entry argument list

5x[312 E] no value assigned to this variable

5x[691 I] data-type length inconsistent with specification

4x[556 I] argument unreferenced in statement function

4x[621 I] input/output dummy argument (possibly) not (re)defined

4x[384 I] truncation of character variable (expression)

3x[383 I] truncation of character constant (expression)

3x[343 I] implicit conversion of complex to scalar

3x[454 I] possible recursive I/O attempt

3x[570 E] type inconsistent with specification

2x[568 E] type inconsistent with first occurrence

2x[573 E] data type inconsistent with specification

2x[ 84 I] no path to this statement

2x[651 I] already imported from module

2x[617 I] conditionally referenced argument is not defined

2x[214 E] not saved

2x[236 E] storage allocation conflict due to multiple equivalences

2x[700 E] object undefined

2x[307 E] variable not defined

1x[115 E] multiple definition of statement label, this one ignored

1x[145 I] implicit conversion of scalar to complex

1x[228 W] size of common block inconsistent with first declaration

1x[230 I] list of objects in named COMMON inconsistent with first declaration

1x[250 I] when referencing modules implicit typing is potentially risky

1x[667 E] undefined: dummy argument not in entry argument list

1x[676 I] none of the objects of the common block is used

1x[616 E] input or input/output argument is not defined

number of error messages: 200

number of warnings: 192

number of informative messages: 3415
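If you want to check a summary like this against the totals at the bottom, a small script can tally the counts per severity letter. This is only a sketch based on the line format shown above (count, "x", a bracketed message number and a letter, then the message text); the letters appear to stand for informative (I), warning (W) and error (E), and the function name is my own.

```python
import re
from collections import Counter

# Matches lines such as "125x[ 94 E] syntax error".
LINE_PATTERN = re.compile(r"^\s*(\d+)x\[\s*(\d+)\s+([IWE])\]\s*(.*)$")


def tally_by_severity(summary_lines):
    """Sum the message counts per severity letter, ignoring other lines."""
    totals = Counter()
    for line in summary_lines:
        match = LINE_PATTERN.match(line)
        if match is None:
            continue  # e.g. the "number of warnings: ..." footer lines
        count, _code, severity, _message = match.groups()
        totals[severity] += int(count)
    return totals


# A few lines copied from the listing above.
sample = [
    "1564x[344 I] implicit conversion of constant (expression) to higher accuracy",
    "125x[ 94 E] syntax error",
    "107x[319 W] not locally allocated, specify SAVE in the module to retain data",
    "2x[ 84 I] no path to this statement",
    "number of warnings: 192",
]
print(dict(tally_by_severity(sample)))
```

Running it over the whole listing is a quick way to spot a duplicated or missing line.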

frac1=+1.

ccc !!! ground properties should be passed as formal parameters !!!

k_ground = 3.4d0 !/* W K-1 m */ /* --??? */

c_ground = 1.d5 !/* J m-3 K-1 */ /* -- ??? */

k_ground is used later in the function, but c_ground never is.

QUESDEF.f: prather_limits = 0.

OCNFUNTAB.f: JS=SS

RADIATION.f: JMO=1+JJDAYS/30.5D0

I, of course, have no idea whether these cases are unintentional, or what the effects of these casts are. I would think that it is generally dangerous to cast unintentionally to a less precise number...
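One effect that is easy to pin down: when Fortran assigns a real value to an integer variable, the fractional part is dropped (truncation toward zero). The Python sketch below mimics that for the RADIATION.f expression, assuming JMO is an integer and JJDAYS is a day-of-year count. Both types are my assumption, and the sample day numbers are made up.

```python
import math


def fortran_real_to_int(value):
    """Mimic Fortran's real-to-integer assignment, which truncates toward zero."""
    return math.trunc(value)


# Hypothetical day-of-year values, evaluated as in JMO=1+JJDAYS/30.5D0.
for jjdays in (45, 365):
    exact = 1 + jjdays / 30.5
    print(jjdays, round(exact, 3), fortran_real_to_int(exact))
```

Whether the truncation is what the modelers intended is a separate question; the sketch only shows what the conversion does.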

In a fixed format source form blanks are not significant. However, a blank in a name, literal constant, operator, or keyword might indicate a syntax error.

Okay, I still don't get it... but that's because I'm not at all familiar with Fortran (yet). Here's one example from RADIATION.f:

DATA PRS1/ 1.013D 03,9.040D 02,8.050D 02,7.150D 02,6.330D 02,

1 5.590D 02,4.920D 02,4.320D 02,3.780D 02,3.290D 02,2.860D 02,

2 2.470D 02,2.130D 02,1.820D 02,1.560D 02,1.320D 02,1.110D 02,

3 9.370D 01,7.890D 01,6.660D 01,5.650D 01,4.800D 01,4.090D 01,

4 3.500D 01,3.000D 01,2.570D 01,1.220D 01,6.000D 00,3.050D 00,

5 1.590D 00,8.540D-01,5.790D-02,3.000D-04/

Each value, "1.013D 03" for example, is flagged as an instance of this issue. In this particular case these are just ways of writing down double-precision numbers, but normally (I guess?) you wouldn't see the space, but instead a + or - sign. There might be a Forcheck option I can flip that will ignore this particular type of issue. I tried looking for other, possibly more significant instances of this issue. I found many that were "just" because of line continuations. That is, a line was intentionally wrapped and so this appeared as a space at the end of the line and the start of the next. Here are two examples:

SEAICE.f: IF (ROICE.gt.0. and. MSI2.lt.AC2OIM) then

ODIAG_PRT.f: SCALEO(LN_MFLX) = 1.D- 6 / DTS

I had no idea that Fortran has such awful syntax for logical expressions. Yes, you read it correctly, the greater-than operator is .gt. and so on. In any case, in the above example in SEAICE.f, the fact that the and-operator is written as ". and." rather than ".and." is what raises this issue. In the ODIAG_PRT.f file, it is the fact that there is a space in the representation of the double-precision number.
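To see why the blanks inside these double-precision literals are harmless in fixed-form source, here is a small Python sketch that reads such a literal the way a fixed-form compiler would: drop the blanks, then treat the "D" as the exponent marker. The function name is my own, and it only handles plain numeric literals like the ones quoted in this post.

```python
def parse_fixed_form_double(literal):
    """Convert a fixed-form Fortran double literal such as '1.013D 03' to a float."""
    compact = "".join(literal.split())  # blanks are not significant in fixed form
    return float(compact.upper().replace("D", "E"))


for text in ("1.013D 03", "8.540D-01", "1.D- 6"):
    print(repr(text), "->", parse_fixed_form_double(text))
```

So "1.013D 03" is the same number as 1.013D+03, and "1.D- 6" is the same as 1.D-6; the blank only matters to a checker looking for likely typos.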