For the past couple of weeks a few of us in the software engineering group have been meeting to take up Steve's modeling challenge: we are attempting to model (visually, not computationally) the proposed solutions from several popular books. The idea is to do this in a way that makes it possible (easy?) to compare the differences and similarities between them.

Here is the homepage* for the project, which roughly tracks what we're up to. I'm going to summarise our progress so far.

In our first few meetings we decided to just "shoot first and ask questions later". That is to say, we collaboratively built up a model of the chapters on wind power as we saw fit in the moment, without following any visual syntax and without worrying too much about what to include or what to ignore. The result looked like this:

At the bottom of that picture is our brainstorming about what other aspects to include (left column), the types of perspectives/analysis that McKay uses and that may be useful to include in a future exercise (middle column), and the types of differences we expect to see when comparing models (right column).

The next step would have been to come up with the same sort of model for another book, and then start to figure out how best to make the models comparable so that it is visually easy to see the differences and similarities between the various models.

We didn't do that. Instead, we decided to try making a more principled model; actually, a set of models. We decided to construct an entity-relationship (ER) model and a goal model (i*) for each of two books, and then work out how to make those models comparable.

We began with the entity-relationship model, again for McKay's book. McKay's book is fairly well segmented: some chapters contain back-of-the-envelope-style analysis, while others discuss the actors and issues more broadly. In our first attempt, shown above, we modeled mainly the two chapters on wind-power analysis. But if we had stuck to just those chapters for the ER and goal models, we would have been left with impoverished models that miss all of the important contextual material framing the wind-power discussion. So we relaxed our wind-power focus slightly to include the parts of the book that discuss the context. In McKay's case, chapter one covers this nicely.

So far we've completed the ER domain model and made a good start on the goal model.

For the wider context (chapter one), we built the following ER model:

This model is a bit of a monster, but I'm told that most models are like that. Beyond the standard UML relationship syntax, we have coloured the nodes to indicate whether a concept comes from the book directly (blue), or whether we included it because we felt it was implied or simply helpful for clarity (yellow).
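To make the colour convention concrete, here is a minimal sketch of how an ER model with provenance-tagged nodes could be written down as plain data. This is not something we have built; the class names (`Provenance`, `Entity`, `ERModel`) and the example concepts are hypothetical placeholders, not taken from our diagram.

```python
from dataclasses import dataclass, field
from enum import Enum


class Provenance(Enum):
    """Where a concept came from, mirroring the node colours in the diagram."""
    BOOK = "blue"     # stated directly in the book
    ADDED = "yellow"  # implied, or added by us for clarity


@dataclass(frozen=True)
class Entity:
    name: str
    provenance: Provenance


@dataclass(frozen=True)
class Relationship:
    source: str
    target: str
    label: str


@dataclass
class ERModel:
    entities: dict[str, Entity] = field(default_factory=dict)
    relationships: list[Relationship] = field(default_factory=list)

    def add_entity(self, name: str, provenance: Provenance) -> None:
        self.entities[name] = Entity(name, provenance)

    def relate(self, source: str, target: str, label: str) -> None:
        # Only allow relationships between entities already in the model.
        if source not in self.entities or target not in self.entities:
            raise KeyError(f"unknown entity in {source!r} -> {target!r}")
        self.relationships.append(Relationship(source, target, label))

    def by_colour(self, provenance: Provenance) -> list[str]:
        # Names of all entities with the given provenance, sorted for stable output.
        return sorted(e.name for e in self.entities.values() if e.provenance is provenance)


# Hypothetical placeholder concepts, for illustration only.
model = ERModel()
model.add_entity("Energy consumption", Provenance.BOOK)
model.add_entity("Energy production", Provenance.BOOK)
model.add_entity("Policy maker", Provenance.ADDED)
model.relate("Policy maker", "Energy production", "influences")
print(model.by_colour(Provenance.BOOK))  # ['Energy consumption', 'Energy production']
```

Keeping provenance as an explicit field, rather than only as a colour in a drawing, would let the "from the book" and "our additions" parts of a model be listed or filtered separately.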

Using the same process we created the following ER model for just the two chapters on wind:

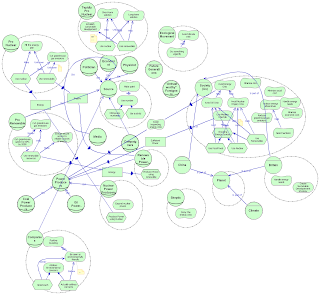

We've also begun to go back over the first chapter and build up an i* goal model. Here it is so far:

Stay tuned for further updates on what we're up to. I'd suggest that at the moment these models should simply be taken as our first hack. We haven't done any work whatsoever to make them very readable or comparable, for instance.

* I feel like "homepage" is a rather outdated word now. Is that so?